Deploying Large Language Models with Ollama

What is Ollama?

Ollama is an open-source tool for serving large language models (LLMs) that lets users deploy and run pre-trained models on their own hardware. It handles downloading, storing, and running models through a single command-line tool, and it can run either directly on the host or inside a Docker container. Once Ollama is installed, a single command is enough to run an open-source LLM locally.

Prerequisites

- Complete the instance creation process, ensuring you select an image that includes ollama-webui.

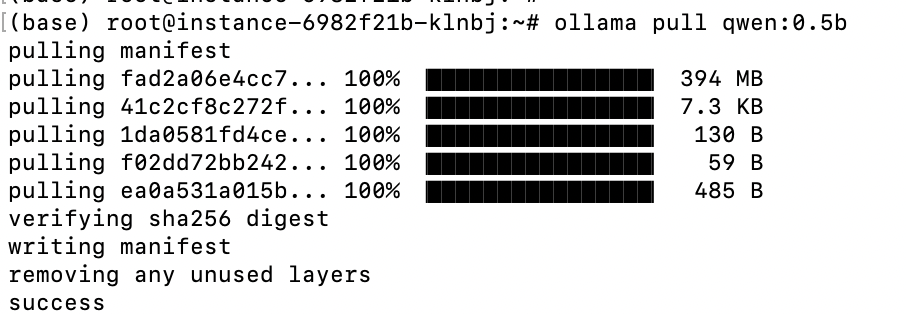

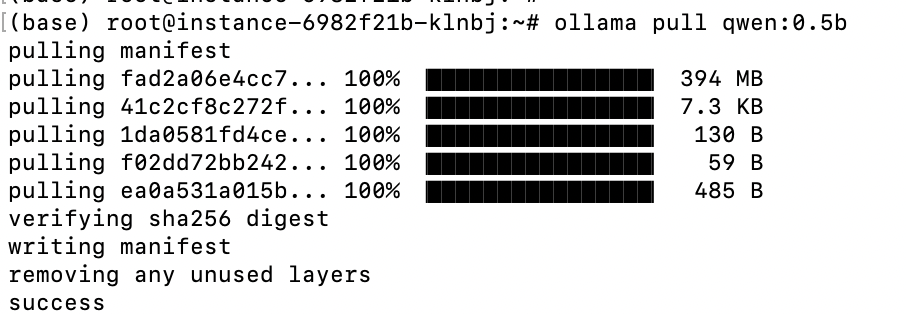

Downloading a Large Language Model

Here, we use qwen:0.5b as an example.

ollama pull qwen:0.5b
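To avoid downloading a model that is already on the instance, the pull step can be wrapped in a small check. The following is a minimal sketch, not part of the official workflow: the helper name model_is_present and the MODEL variable are illustrative, and it assumes that ollama list prints a header row followed by one model per line with the model name in the first column.

```shell
#!/bin/sh
# Model to check for; override by setting MODEL in the environment (illustrative variable).
MODEL="${MODEL:-qwen:0.5b}"

# Hypothetical helper: succeeds if the given name appears in the first
# column of `ollama list` (the header row is skipped).
model_is_present() {
    ollama list | awk 'NR > 1 { print $1 }' | grep -Fxq "$1"
}

if ! command -v ollama >/dev/null 2>&1; then
    echo "ollama not found on PATH" >&2
elif model_is_present "$MODEL"; then
    echo "$MODEL is already downloaded"
else
    ollama pull "$MODEL"
fi
```

Running ollama list on its own is also a quick way to confirm that the download finished.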

Running the Large Language Model

ollama run qwen:0.5b
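Besides the interactive prompt, a running Ollama server can also be queried over its local HTTP API. The sketch below assumes the server is listening on the default address http://localhost:11434 and that curl is available on the instance. The OLLAMA_URL variable and the build_payload helper are illustrative names, and the helper does not escape quotes or backslashes in the prompt.

```shell
#!/bin/sh
# Base URL of the Ollama server (assumed default; adjust if your instance differs).
OLLAMA_URL="${OLLAMA_URL:-http://localhost:11434}"

# Hypothetical helper: builds the JSON body for /api/generate.
# It does not escape special characters, so keep the prompt simple.
build_payload() {
    printf '{"model": "%s", "prompt": "%s", "stream": false}' "$1" "$2"
}

# Send the request only if the server responds; otherwise print a hint.
if curl -s -o /dev/null --max-time 2 "$OLLAMA_URL/api/tags"; then
    curl -s "$OLLAMA_URL/api/generate" \
        -d "$(build_payload 'qwen:0.5b' 'Why is the sky blue?')"
else
    echo "No Ollama server reachable at $OLLAMA_URL" >&2
fi
```

With "stream" set to false, the server returns a single JSON object instead of a stream of partial responses, which is easier to handle in scripts.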

At this point, you have successfully deployed a large language model on your instance. The ollama run command opens an interactive prompt where you can ask the model questions directly; type /bye to exit.